TLDR

In the context of generative modeling, we examine ODEs, SDEs, and two recent works that share the idea of learning shortcuts that traverse the vector fields defined by ODEs in fewer steps. We then discuss how this idea generalizes to both ODE- and SDE-based models.

Differential Equations

Let’s start with a general scenario of generative modeling: suppose you want to generate data that follows a distribution . In many cases, the exact form of is unknown. What you can do is follow the idea of normalizing flow1: start from a very simple, closed-form distribution (for example, a standard normal distribution), transform this distribution through time with intermediate distributions , and finally obtain the estimated distribution . By doing this, you are essentially trying to solve a differential equation (DE)2 that depends on time:

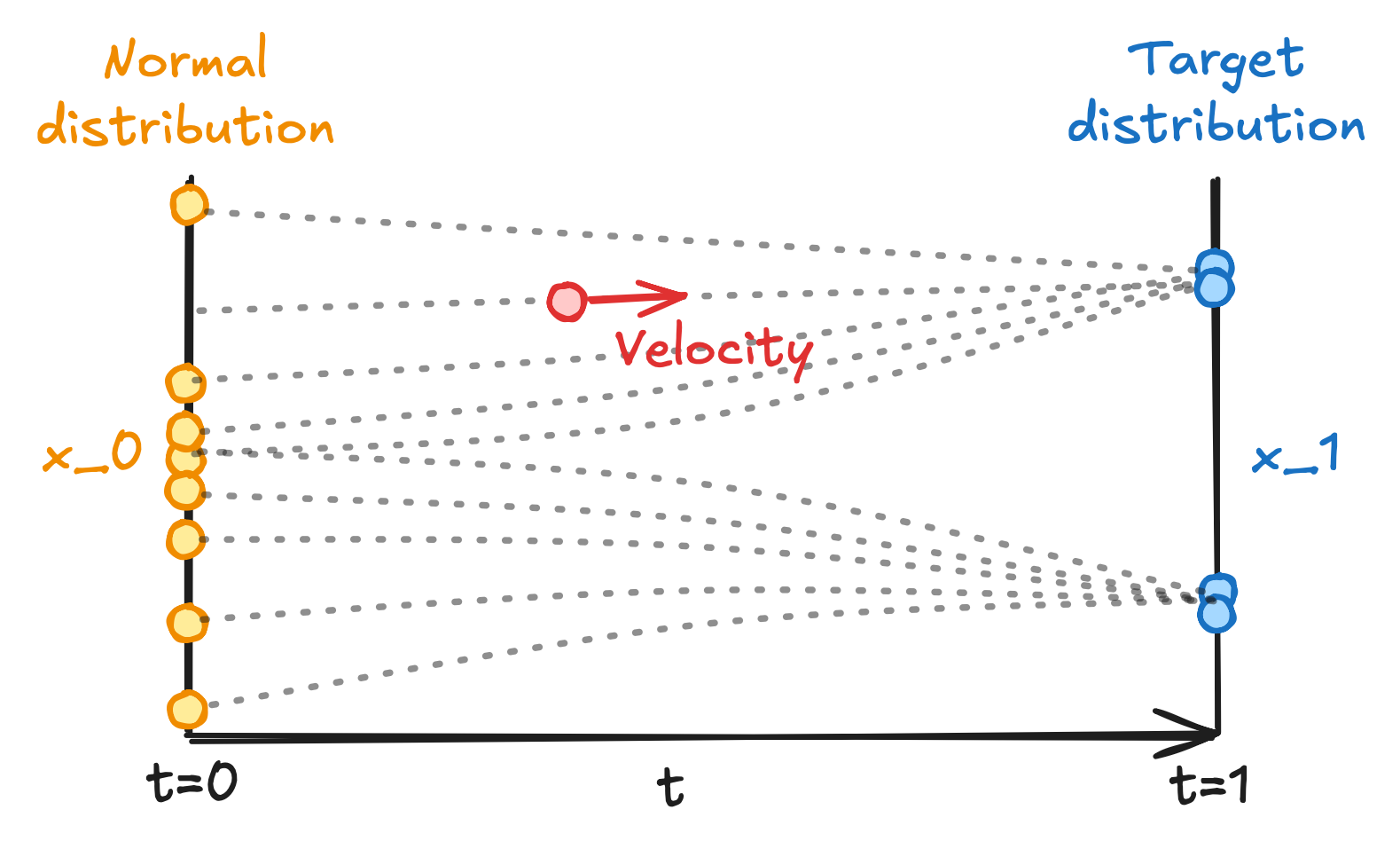

where is the drift component that is deterministic, and is the diffusion term driven by Brownian motion3 (denoted by ) that is stochastic. This differential equation specifies a time-dependent vector (velocity) field4 telling how a data point should be moved as time evolves from to (i.e., a flow5 from to ). Below we give an illustration where is 1-dimensional:

Vector field between two distributions specified by a differential equation.

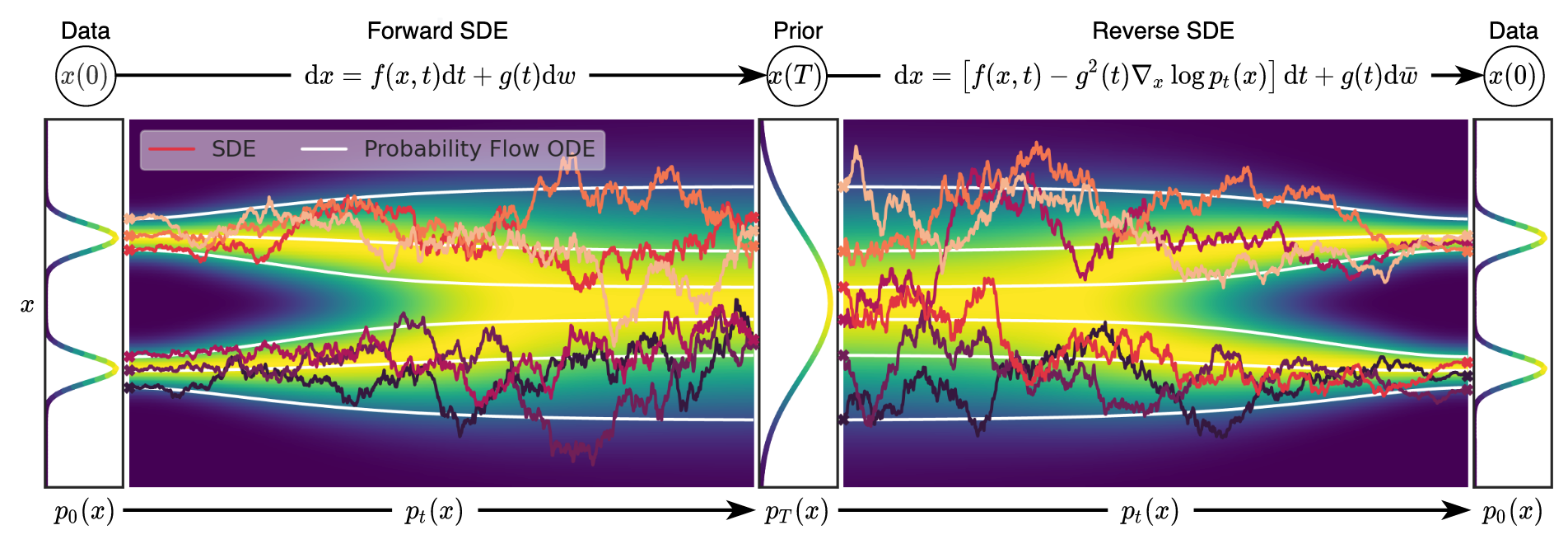

When , we get an ordinary differential equation (ODE)6 where the vector field is deterministic, i.e., the movement of is fully determined by and . Otherwise, we get a stochastic differential equation (SDE)7 where the movement of has a certain level of randomness. Extending the previous illustration, below we show the difference in flow of under ODE and SDE:

Difference of movements in vector fields specified by ODE and SDE. Source: Song, Yang, et al. “Score-based generative modeling through stochastic differential equations.” Note that their time is reversed.

As you would imagine, once we manage to solve the differential equation, even if we still cannot have a closed form of , we can sample from by sampling a data point from and get the generated data point by calculating the following forward-time integral with an integration technique of our choice:

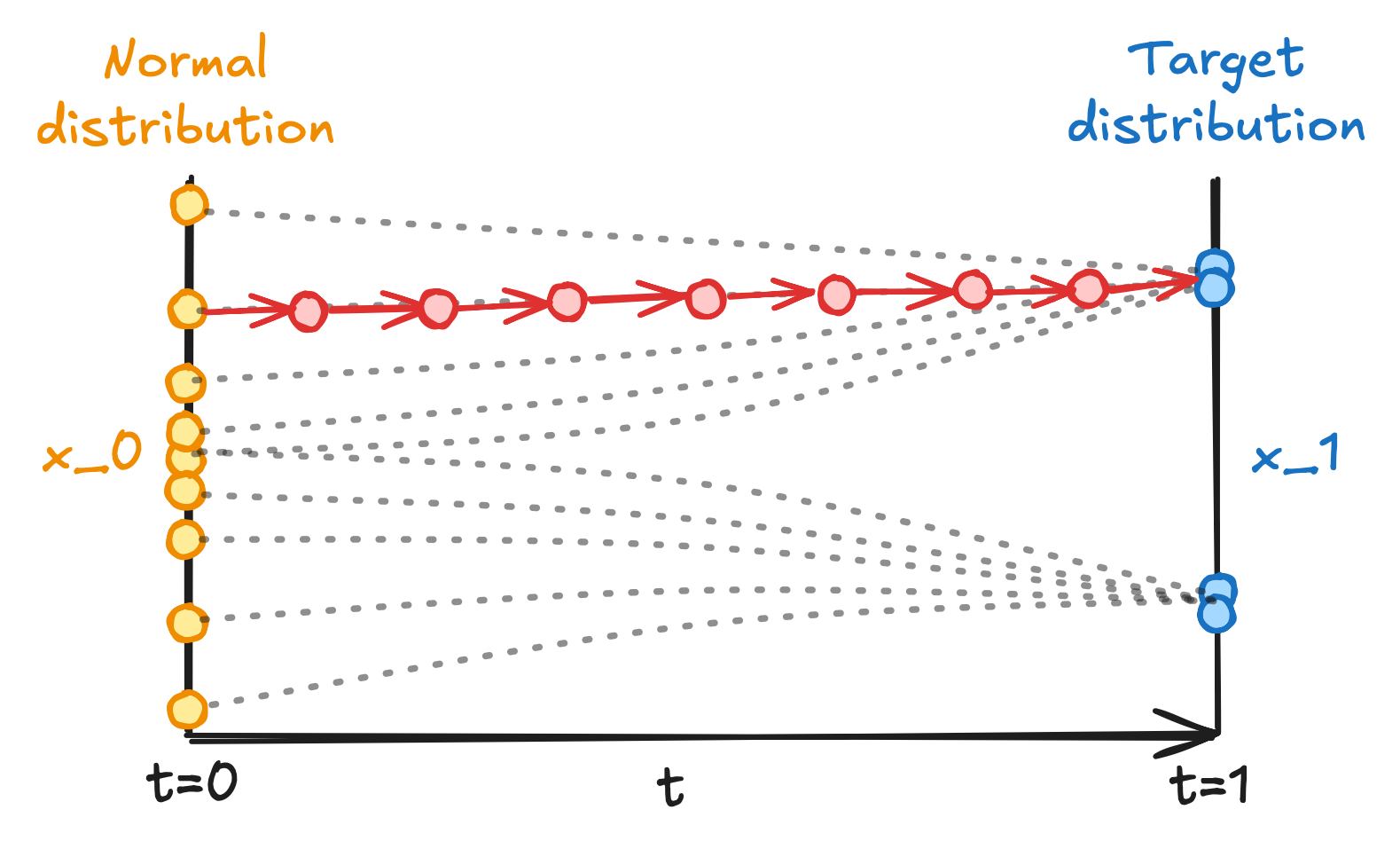

Or more intuitively, moving towards along time in the vector field:

A flow of data point moving from towards in the vector field.

ODE and Flow Matching

ODE in Generative Modeling

For now, let’s focus on the ODE formulation since it is notationally simpler than the SDE. Recall the ODE of our generative model:

$$\frac{dx_t}{dt} = \mu(x_t, t)$$

Essentially, $\mu$ is the vector field. For every possible combination of data point $x_t$ and time $t$, $\mu(x_t, t)$ is the instantaneous velocity with which the point will move. To generate a data point $x_1$, we perform the integral:

$$x_1 = x_0 + \int_0^1 \mu(x_t, t)\,dt$$

To calculate this integral, a simple and widely adopted method is the Euler method. Choose time steps $0 = t_0 < t_1 < \dots < t_N = 1$, and for each integral step $i = 0, \dots, N - 1$:

$$x_{t_{i+1}} = x_{t_i} + (t_{i+1} - t_i)\,\mu(x_{t_i}, t_i)$$

In other words, we discretize the time span $[0, 1]$ into $N$ time steps, and for each step the data point is moved based on the instantaneous velocity at the current step.
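As a rough sketch of the Euler update described above, the snippet below integrates a one-dimensional ODE from time 0 to time 1 with uniform steps. The name `euler_integrate` and the toy velocity field (velocity equal to the current position, whose exact flow multiplies the starting point by e) are assumptions made for illustration only.

```python
import math


def euler_integrate(mu, x0, num_steps):
    """Integrate dx/dt = mu(x, t) from t = 0 to t = 1 with uniform Euler steps."""
    x, dt = x0, 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        x = x + dt * mu(x, t)  # move along the instantaneous velocity at (x, t)
    return x


# Toy field (assumption): mu(x, t) = x, so the exact solution is x_1 = x_0 * e.
def toy_mu(x, t):
    return x


coarse = euler_integrate(toy_mu, 1.0, 4)    # few steps, large discretization error
fine = euler_integrate(toy_mu, 1.0, 1000)   # many steps, close to math.e
```

With only 4 steps the result is noticeably further from the exact value than with 1000 steps, which is the kind of discretization error that few-step sampling has to deal with.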

Note

There are other methods to calculate the integral, of course. For example, one can use the solvers in the torchdiffeq Python package.

Flow Matching

In many scenarios, the exact form of the vector field $\mu$ is unknown. The general idea of flow matching [10] is to find a ground truth vector field that defines the flow transporting $p(x_0)$ to $p(x_1)$, and build a neural network $\mu_\theta$ that is trained to match the ground truth vector field, hence the name. In practice, this is usually done by independently sampling $x_0$ from the noise and $x_1$ from the training data, calculating the intermediate data point $x_t$ and the ground truth velocity $\mu(x_t, t)$, and minimizing the deviation between $\mu_\theta(x_t, t)$ and $\mu(x_t, t)$.

Ideally, the ground truth vector field should be as straight as possible, so we can use a small number of steps to calculate the ODE integral. Thus, the ground truth velocity is usually defined following the optimal transport flow map:

$$x_t = (1 - t)\,x_0 + t\,x_1, \quad \mu(x_t, t) = x_1 - x_0$$

And a neural network $\mu_\theta$ is trained to match the ground truth vectors as:

$$\mathcal{L} = \mathbb{E}_{x_0, x_1, t} \left\| \mu_\theta(x_t, t) - (x_1 - x_0) \right\|^2$$
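To make the training recipe concrete, here is a minimal sketch of how one training pair and its loss could be built under the straight-line (optimal transport) interpolation. The helper names and the placeholder `zero_model` are hypothetical; a real setup would use a neural network and batched tensors instead of Python lists.

```python
import random


def flow_matching_pair(x0, x1, t):
    """Return the interpolated point x_t and its target velocity x_1 - x_0."""
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    target = [b - a for a, b in zip(x0, x1)]
    return x_t, target


def flow_matching_loss(model, x0, x1, t):
    """Squared error between the model's velocity at (x_t, t) and the target."""
    x_t, target = flow_matching_pair(x0, x1, t)
    pred = model(x_t, t)
    return sum((p - v) ** 2 for p, v in zip(pred, target))


rng = random.Random(0)
x0 = [rng.gauss(0.0, 1.0) for _ in range(2)]  # noise sample
x1 = [3.0, -1.0]                              # stand-in for a training data point
t = rng.random()                              # time sampled uniformly in [0, 1)


def zero_model(x_t, t):
    """Hypothetical untrained model that always predicts zero velocity."""
    return [0.0 for _ in x_t]


loss = flow_matching_loss(zero_model, x0, x1, t)
```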

Curvy Vector Field

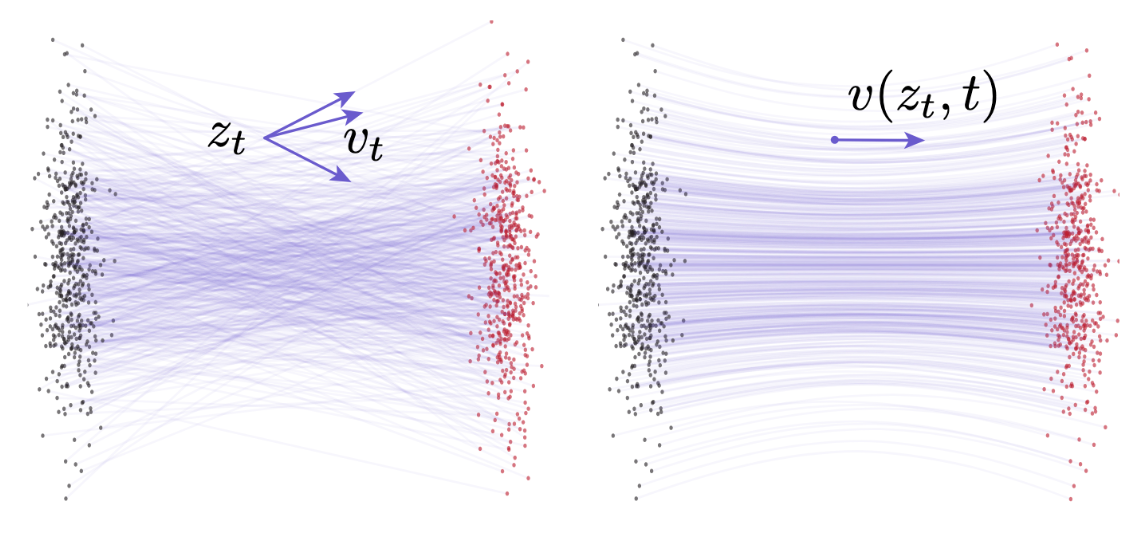

Although the ground truth vector field is designed to be straight, in practice it usually is not. When the data space is high-dimensional and the target distribution is complex, there will be multiple pairs of that result in the same intermediate data point , thus multiple velocities . At the end of the day, the actual ground truth velocity at will be the average of all possible velocities that pass through . This will lead to a “curvy” vector field, illustrated as follows:

Left: multiple vectors passing through the same intermediate data point. Right: the resulting ground truth vector field. Source: Geng, Zhengyang, et al. “Mean Flows for One-step Generative Modeling.” Note and in the figure correspond to and in this post, respectively.
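The averaging effect can be seen in a tiny one-dimensional example. The sketch below (with a hypothetical helper `marginal_velocities` and a hand-picked set of equally likely pairs) groups straight-line pairs by the intermediate point they pass through at a given time and averages their velocities.

```python
from collections import defaultdict


def marginal_velocities(pairs, t):
    """Average the velocities x_1 - x_0 of all pairs that share the same x_t."""
    buckets = defaultdict(list)
    for x0, x1 in pairs:
        x_t = (1.0 - t) * x0 + t * x1
        buckets[x_t].append(x1 - x0)
    return {x_t: sum(v) / len(v) for x_t, v in buckets.items()}


# Four equally likely (x_0, x_1) pairs; two of them cross at x_t = 0 when t = 0.5.
pairs = [(-1.0, 1.0), (1.0, -1.0), (-1.0, -1.0), (1.0, 1.0)]
field = marginal_velocities(pairs, t=0.5)
```

Here the two crossing pairs have velocities of 2 and -2, yet the averaged velocity at their shared midpoint is 0, so the resulting field no longer matches either straight path.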

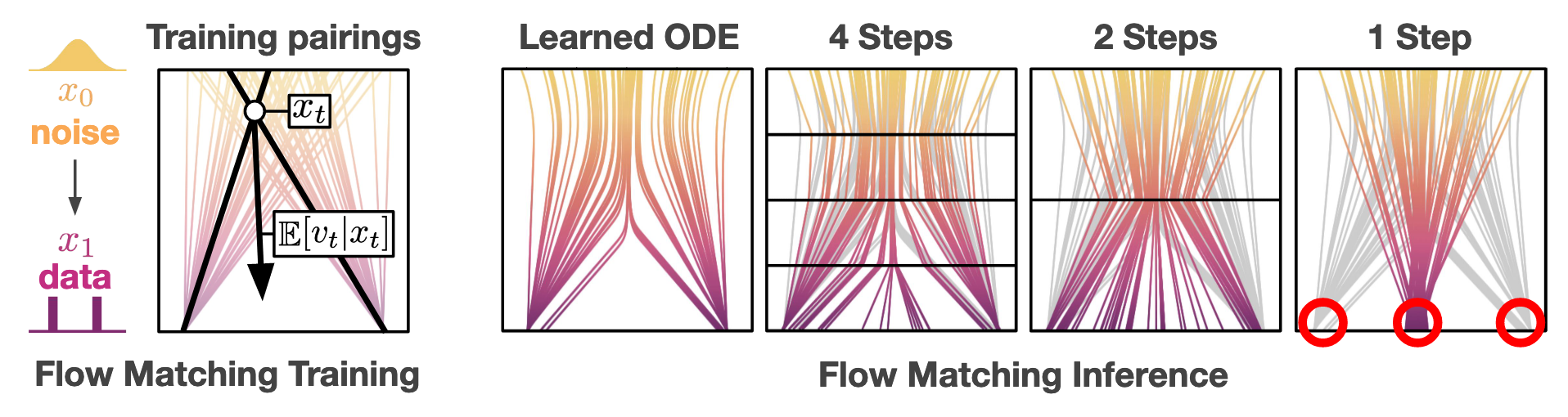

As we discussed, when you calculate the ODE integral, you are using the instantaneous velocity—tangent of the curves in the vector field—of each step. You would imagine this will lead to subpar performance when using a small number of steps, as demonstrated below:

Native flow matching models fail at few-step sampling. Source: Frans, Kevin, et al. “One step diffusion via shortcut models.”

Shortcut Vector Field

If we cannot straighten the ground truth vector field, can we tackle the problem of few-step sampling by learning velocities that properly jump across long time steps instead of learning the instantaneous velocities? Yes, we can.

Shortcut Models

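Shortcut models [11] implement the above idea by training a network $u_\theta(x_t, t, d)$ to match the velocities that jump across long time steps (termed shortcuts in the paper). A ground truth shortcut $u(x_t, t, d)$ will be the velocity pointing from $x_t$ to $x_{t+d}$, formally:

$$u(x_t, t, d) = \frac{x_{t+d} - x_t}{d} = \frac{1}{d} \int_t^{t+d} \mu(x_\tau, \tau)\,d\tau$$

Ideally, you can transform $x_0$ to $x_1$ within one step with the learned shortcuts:

$$x_1 \approx x_0 + u_\theta(x_0, 0, 1)$$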

Note

Of course, in practice shortcut models face the same problem mentioned in the Curvy Vector Field section: the same data point $x_t$ corresponds to multiple shortcut velocities to different data points $x_{t+d}$, making the ground truth shortcut velocity at $x_t$ the average of all possibilities. So, shortcut models have a performance advantage with few sampling steps compared to conventional flow matching models, but typically don’t reach the same performance with one step as with more steps.

The theory is quite straightforward. The tricky part is the model training. First, the network expands from learning all possible velocities at $(x_t, t)$ to all velocities at $(x_t, t, d)$ with $d \in [0, 1 - t]$. Essentially, the shortcut vector field has one more dimension than the instantaneous vector field, making the learning space larger. Second, calculating the ground truth shortcut involves calculating an integral, which can be computationally heavy.

To tackle these challenges, shortcut models introduce self-consistency shortcuts: one shortcut with step size $2d$ should equal two consecutive shortcuts both with step size $d$:

$$u(x_t, t, 2d) = \frac{1}{2}\,u(x_t, t, d) + \frac{1}{2}\,u(x_{t+d}, t + d, d)$$

The model is then trained with the combination of matching instantaneous velocities and self-consistency shortcuts as below. Notice that we don’t train a separate network for matching the instantaneous vectors but leverage the fact that the shortcut is the instantaneous velocity when .

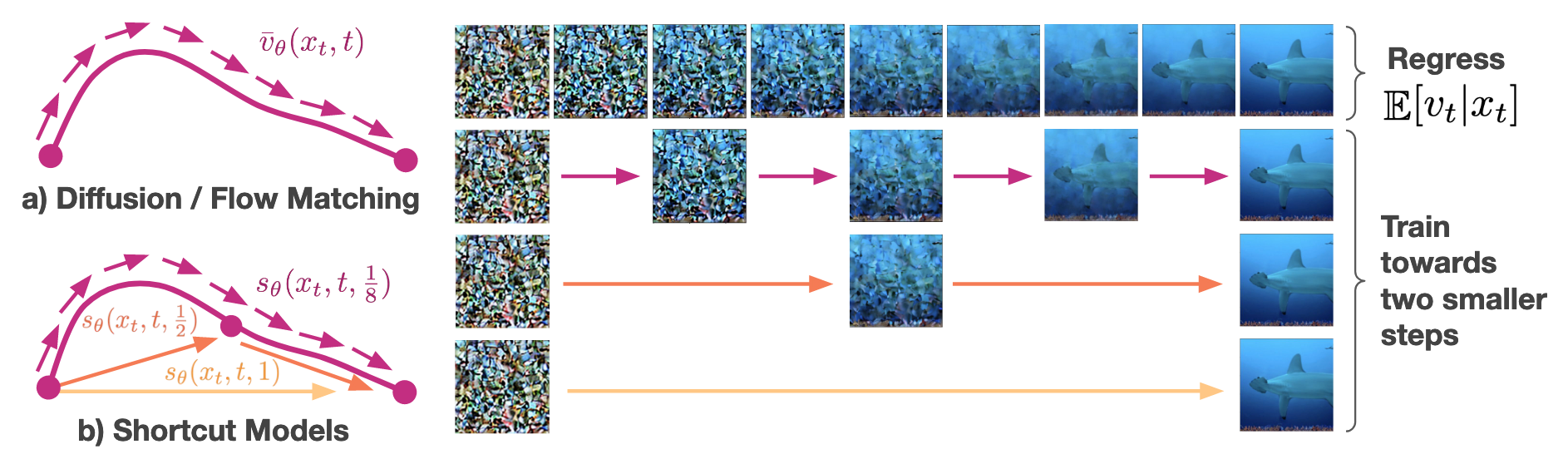

Where is stop gradient, i.e., detach from back propagation, making it a pseudo ground truth. Below is an illustration of the training process provided in the original paper.

Training of the shortcut models with self-consistency loss.
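The self-consistency target can be sketched in a few lines. In the snippet below, `self_consistency_target` is a hypothetical helper, and `exact_shortcut` is the closed-form shortcut of an assumed toy field (velocity equal to position) standing in for the network; in actual training the returned value would additionally be detached from the gradient graph.

```python
import math


def self_consistency_target(u, x_t, t, d):
    """Build the target for a shortcut of size 2d from two consecutive size-d shortcuts."""
    first = u(x_t, t, d)
    x_mid = x_t + d * first        # follow the first shortcut for a step of size d
    second = u(x_mid, t + d, d)    # second shortcut starts where the first one ended
    return 0.5 * (first + second)  # average velocity over the combined step


def exact_shortcut(x, t, d):
    """Exact shortcut (x_{t+d} - x_t) / d for the toy ODE dx/dt = x (assumption)."""
    return x * (math.exp(d) - 1.0) / d


target = self_consistency_target(exact_shortcut, 1.0, 0.0, 0.25)
direct = exact_shortcut(1.0, 0.0, 0.5)  # the size-2d shortcut computed directly
```

For an exact shortcut function the two values agree, which is the property the self-consistency loss pushes the network towards.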

Mean Flow

Mean flow [12] is another work sharing the idea of learning velocities that take shortcuts with large step sizes, but with a stronger theoretical foundation and a different approach to training.

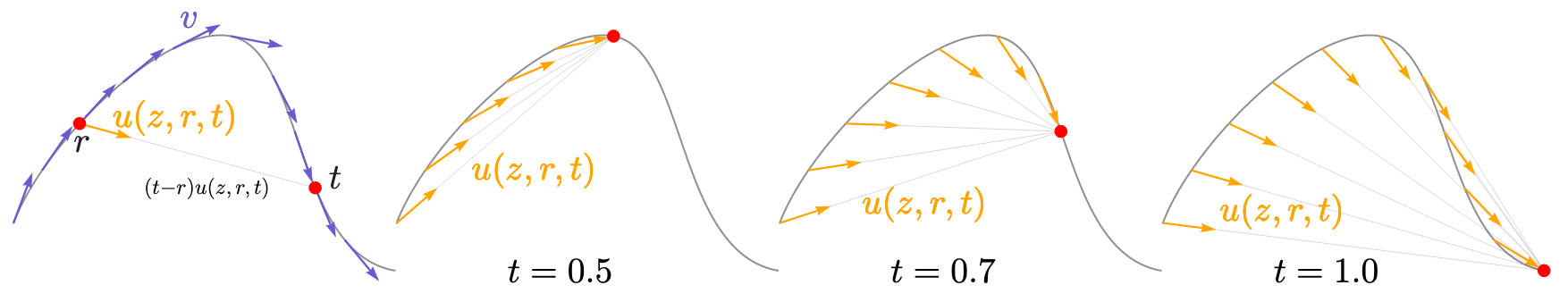

Illustration of the average velocity provided in the original paper.

Mean flow defines an average velocity $u(x_t, r, t)$ as a shortcut between times $r$ and $t$, where $r$ and $t$ are independent:

$$u(x_t, r, t) = \frac{1}{t - r} \int_r^t \mu(x_\tau, \tau)\,d\tau$$

This average velocity is essentially equivalent to a shortcut in shortcut models given $d = t - r$. What differentiates mean flow from shortcut models is that mean flow aims to provide a ground truth of the vector field defined by $u$, and directly trains a network $u_\theta$ to match the ground truth.

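We transform the above equation by multiplying both sides by $(t - r)$, differentiating with respect to $t$, and rearranging components, and get:

$$u(x_t, r, t) = \mu(x_t, t) - (t - r)\,\frac{d}{dt} u(x_t, r, t)$$

We get the average velocity on the left, and the instantaneous velocity and the time derivative components on the right. This defines the ground truth average vector field, and our goal now is to calculate the right side. We already know the ground truth instantaneous velocity $\mu(x_t, t) = x_1 - x_0$. To compute the time derivative component, we can expand it in terms of partial derivatives:

$$\frac{d}{dt} u(x_t, r, t) = \frac{dx_t}{dt}\,\partial_x u + \frac{dr}{dt}\,\partial_r u + \frac{dt}{dt}\,\partial_t u$$

From the ODE definition, $\frac{dx_t}{dt} = \mu(x_t, t)$, and $\frac{dt}{dt} = 1$. Since $r$ and $t$ are independent, $\frac{dr}{dt} = 0$. Thus, we have:

$$\frac{d}{dt} u(x_t, r, t) = \mu(x_t, t)\,\partial_x u + \partial_t u$$

This means the time derivative component is the vector product between $[\partial_x u, \partial_r u, \partial_t u]$ and $[\mu, 0, 1]$. In practice, this can be computed using the Jacobian vector product (JVP) functions in NN libraries, such as the torch.func.jvp function in PyTorch. In summary, the mean flow loss function is:

$$\mathcal{L} = \mathbb{E}_{x_0, x_1, r, t} \left\| u_\theta(x_t, r, t) - \text{sg}\left( \mu(x_t, t) - (t - r) \left( \mu(x_t, t)\,\partial_x u_\theta + \partial_t u_\theta \right) \right) \right\|^2$$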

Notice that the JVP computation inside $\text{sg}(\cdot)$ is performed with the network $u_\theta$ itself. In this regard, this loss function shares a similar idea with the self-consistency loss in shortcut models: supervising the network with output produced by the network itself.
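As a sanity check of the relation between average and instantaneous velocity, the sketch below evaluates the training target numerically for an assumed toy ODE (velocity equal to position), whose average velocity is known in closed form. A central finite difference along the direction (velocity, 0, 1) is used here as a simple stand-in for the JVP; a real implementation would call an automatic differentiation routine instead.

```python
import math


def mu(x, t):
    """Toy instantaneous velocity (assumption): dx/dt = x."""
    return x


def u(x, r, t):
    """Exact average velocity of the toy ODE between times r and t, evaluated at x = x_t."""
    s = t - r
    return x * (1.0 - math.exp(-s)) / s


def total_derivative(u_fn, x, r, t, eps=1e-5):
    """Finite-difference stand-in for the JVP of u_fn with tangent (mu, 0, 1)."""
    v = mu(x, t)
    forward = u_fn(x + eps * v, r, t + eps)
    backward = u_fn(x - eps * v, r, t - eps)
    return (forward - backward) / (2.0 * eps)


x_t, r, t = 1.5, 0.2, 0.9
# Target: instantaneous velocity minus (t - r) times the total time derivative.
target = mu(x_t, t) - (t - r) * total_derivative(u, x_t, r, t)
```

Because `u` here is the true average velocity, `target` matches `u(x_t, r, t)` up to finite-difference error, which is the fixed point the loss is designed to reach.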

Note

While the loss function of mean flow is directly derived from the integral definition of shortcuts/average velocities, the self-consistency loss in shortcut models is also implicitly simulating the integral definition. If we expand a shortcut $u(x_t, t, d)$ following the idea of self-consistency recursively $n$ times:

$$u(x_t, t, d) = \frac{1}{2^n} \sum_{k=0}^{2^n - 1} u\left( x_{t + k d / 2^n},\ t + \frac{k d}{2^n},\ \frac{d}{2^n} \right)$$

When $n \to \infty$, we essentially get the integral definition.

Extended Reading: Rectified Flow

Both shortcut models and mean flow are built on top of the ground truth curvy ODE field. They don’t modify the field $\mu$, but rather try to learn shortcut velocities $u$ that can traverse the field with fewer Euler steps. This is reflected in their loss function design: shortcut models’ loss function specifically includes a standard flow matching component, and mean flow’s loss function is derived from the relationship between vector fields $\mu$ and $u$.

Rectified flow [13], another family of flow matching models that aims to achieve one-step sampling, is fundamentally different in this regard. It aims to replace the original ground truth ODE field with a new one with straight flows. Ideally, the resulting ODE field has zero curvature, enabling one-step integration with the simple Euler method. This usually involves augmentation of the training data and a repeated reflow process.

We won’t discuss rectified flow in further detail in this post, but it’s worth pointing out its difference from shortcut models and mean flow.

SDE and Score Matching

SDE in Generative Modeling

An SDE, as its name suggests, is a differential equation with a stochastic component. Recall the general differential equation we introduced at the beginning:

$$dx_t = \mu(x_t, t)\,dt + \sigma(x_t, t)\,dW_t$$

In practice, the diffusion term $\sigma$ usually only depends on $t$, so we will use the simpler formula going forward:

$$dx_t = \mu(x_t, t)\,dt + \sigma(t)\,dW_t$$

$W_t$ is the Brownian motion (a.k.a. standard Wiener process). In practice, its behavior over time can be described as $W_{t + \Delta t} - W_t \sim \mathcal{N}(0, \Delta t)$. This is the source of the SDE’s stochasticity, and also why people like to call the family of SDE-based generative models diffusion models [14], since Brownian motion is derived from physical diffusion processes.

In the context of generative modeling, the stochasticity in an SDE means it can theoretically handle augmented data or data that is stochastic in nature (e.g., financial data) more gracefully. Practically, it also enables techniques such as stochastic control guidance [16]. At the same time, it also means an SDE is mathematically more complicated than an ODE. We no longer have a deterministic vector field specifying flows of data points $x_0$ moving towards $x_1$. Instead, both $\mu$ and $\sigma$ have to be designed to ensure that the SDE leads to the target distribution $p(x_1)$ we want.

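To solve the SDE, similar to the Euler method used for solving the ODE, we can use the Euler-Maruyama method:

$$x_{t + \Delta t} = x_t + \mu(x_t, t)\,\Delta t + \sigma(t)\sqrt{\Delta t}\,\epsilon, \quad \epsilon \sim \mathcal{N}(0, I)$$

In other words, we move the data point guided by the velocity $\mu(x_t, t)$ plus a bit of Gaussian noise scaled by $\sigma(t)\sqrt{\Delta t}$.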
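The Euler-Maruyama update can be sketched for a one-dimensional SDE as follows. The function name `euler_maruyama`, the uniform step size, and the toy drift and diffusion functions passed in below are assumptions for illustration rather than any particular model’s sampler.

```python
import math
import random


def euler_maruyama(mu, sigma, x0, num_steps, rng):
    """Simulate dx = mu(x, t) dt + sigma(t) dW from t = 0 to t = 1 with uniform steps."""
    x, dt = x0, 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        noise = rng.gauss(0.0, 1.0)  # standard normal sample
        # Deterministic drift step plus Brownian increment with variance dt.
        x = x + mu(x, t) * dt + sigma(t) * math.sqrt(dt) * noise
    return x


rng = random.Random(0)
# With zero diffusion the update reduces to the plain Euler method.
deterministic = euler_maruyama(lambda x, t: x, lambda t: 0.0, 1.0, 10, rng)
# With zero drift and unit diffusion the end point is a sample of Brownian motion at t = 1.
brownian = [euler_maruyama(lambda x, t: 0.0, lambda t: 1.0, 0.0, 20, rng) for _ in range(2000)]
```

Setting the diffusion to zero recovers the deterministic Euler result, which is one way to see the ODE as a special case of the SDE.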

Score Matching

In an SDE, the exact form of the vector field $\mu$ is still (quite likely) unknown. To solve this, the general idea is consistent with flow matching: we want to find the ground truth $\mu$ and build a neural network to match it.

Score matching models [18] implement this idea by parameterizing $\mu$ as:

$$\mu(x_t, t) = v(x_t, t) + \frac{\sigma^2(t)}{2}\,\nabla \log p(x_t)$$

where $v(x_t, t)$ is a velocity similar to that in the ODE, and $\nabla \log p(x_t)$ is the score (a.k.a. informant) of $p(x_t)$. Without going too deep into the relevant theories, think of the score as a “compass” that points in the direction where $x_t$ becomes more likely to belong to the distribution $p(x_t)$. The beauty of introducing the score is that depending on the definition of ground truth $p(x_t)$, the velocity $v$ can be derived from the score, or vice versa. Then, we only have to focus on building a learnable score function $s_\theta(x_t, t)$ to match the ground truth score using the loss function below, hence the name score matching:

$$\mathcal{L} = \mathbb{E}_{x_t, t} \left\| s_\theta(x_t, t) - \nabla \log p(x_t) \right\|^2$$

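For example, if we have time-dependent coefficients $\alpha_t$ and $\beta_t$ (termed noise schedulers in most diffusion models), and define that $x_t$ follows the distribution below given a clean data point $x_1$:

$$p(x_t \mid x_1) = \mathcal{N}(\alpha_t x_1,\ \beta_t^2 I)$$

then we will have:

$$\nabla \log p(x_t \mid x_1) = -\frac{x_t - \alpha_t x_1}{\beta_t^2}, \quad v(x_t, t) = \left( \beta_t^2\,\frac{\dot{\alpha}_t}{\alpha_t} - \dot{\beta}_t \beta_t \right) \nabla \log p(x_t) + \frac{\dot{\alpha}_t}{\alpha_t}\,x_t$$

Some works [14] also propose to re-parameterize the score function with noise $\epsilon$ sampled from a standard normal distribution, so that the neural network can be a learnable denoiser $\epsilon_\theta(x_t, t)$ that matches the noise rather than the score. Since $x_t = \alpha_t x_1 + \beta_t \epsilon$ gives $\nabla \log p(x_t \mid x_1) = -\epsilon / \beta_t$, both approaches are equivalent.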
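The equivalence between predicting the score and predicting the noise can be checked numerically in one dimension. The sketch below assumes a Gaussian conditional distribution with mean `alpha * x_1` and standard deviation `beta`; the function name and the specific numbers are illustrative choices.

```python
def conditional_score(x_t, x_1, alpha, beta):
    """Score of the Gaussian N(alpha * x_1, beta^2) evaluated at x_t (1-D case)."""
    return -(x_t - alpha * x_1) / beta ** 2


alpha, beta = 0.6, 0.8         # assumed scheduler values at some time t
x_1, eps = 2.0, -0.5           # clean data point and a fixed noise sample
x_t = alpha * x_1 + beta * eps  # noisy point built from the data and the noise

score = conditional_score(x_t, x_1, alpha, beta)
score_from_noise = -eps / beta  # the same quantity expressed through the noise
```

Both expressions give the same value, so a network that predicts the noise can be rescaled into a score estimate and vice versa.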

Shortcuts in SDE

Most existing efforts sharing the idea of shortcut vector fields are grounded in ODEs. However, given the correlations between SDE and ODE, learning an SDE that follows the same idea should be straightforward. Generally speaking, SDE training, similar to ODE, focuses on the deterministic drift component $\mu$. One should be able to, for example, use the same mean flow loss function to train a score function for solving an SDE.

Note

Needless to say, generalizing shortcut models and mean flow to flow matching models with ground truth vector fields other than optimal transport flow requires no modification either, since most such models (e.g., Bayesian flow) are ultimately grounded in ODE.

One caveat of training a “shortcut SDE” is that the ideal result of one-step sampling contradicts the stochastic nature of SDE—if you are going to perform the sampling in one step, you are probably better off using ODE to begin with. Still, I believe it would be useful to train an SDE so that its benefits versus ODE are preserved, while still enabling the lowering of sampling steps for improved computational efficiency.

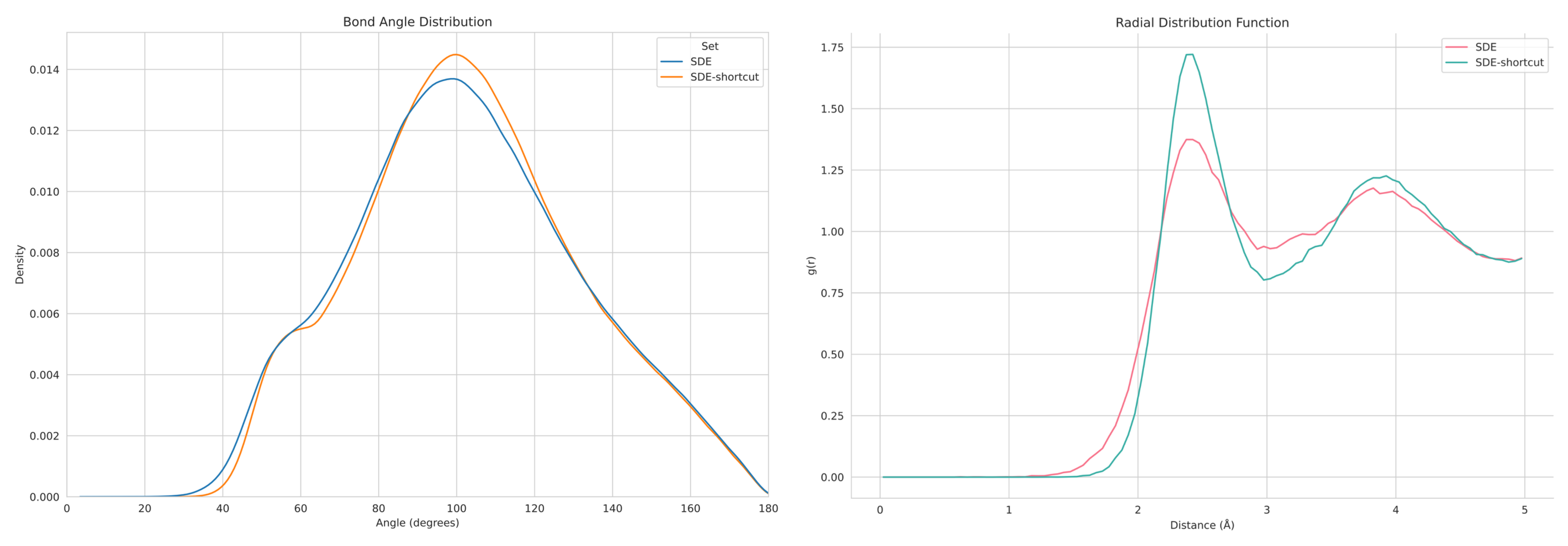

Below are some preliminary results I obtained from a set of amorphous material generation experiments. You don’t need to understand the figure—just know that it shows that applying the idea of learning shortcuts to SDE does yield better results compared to the vanilla SDE when using few-step sampling.

Structural functions of generated materials, sampled in 10 steps.

References

- Holderrieth and Erives, “An Introduction to Flow Matching and Diffusion Models.”

- Song and Ermon, “Generative Modeling by Estimating Gradients of the Data Distribution.”

Footnotes

[1] Rezende, Danilo, and Shakir Mohamed. “Variational inference with normalizing flows.”

[6] https://en.wikipedia.org/wiki/Ordinary_differential_equation

[7] https://en.wikipedia.org/wiki/Stochastic_differential_equation

[10] Lipman, Yaron, et al. “Flow matching for generative modeling.”

[11] Frans, Kevin, et al. “One step diffusion via shortcut models.”

[12] Geng, Zhengyang, et al. “Mean Flows for One-step Generative Modeling.”

[13] Liu, Xingchao, Chengyue Gong, and Qiang Liu. “Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow.”

[14] Ho, Jonathan, Ajay Jain, and Pieter Abbeel. “Denoising diffusion probabilistic models.”

[16] Huang et al., “Symbolic Music Generation with Non-Differentiable Rule Guided Diffusion.”

[18] Song et al., “Score-Based Generative Modeling through Stochastic Differential Equations.”